The functions get_demand() and get_occupancy() perform time-series calculations that are repeated on a daily basis. Each 24-hour period is considered an independent optimization window, so the corresponding time-series calculations can be done in parallel.
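Because each day is independent, the work can be split by calendar day and the pieces processed on different cores. The following is a minimal base R sketch of that idea. It uses the parallel package instead of mirai (which is what evsim uses), and the columns `start` and `energy` as well as the functions `daily_profile()` and `daily_profiles_parallel()` are hypothetical illustrations, not evsim's implementation.

```r
library(parallel)

# Hypothetical per-day calculation: energy (kWh) of the sessions starting in
# each hour of the day. It only needs one day's sessions, so days are independent.
daily_profile <- function(day_sessions) {
  hours <- factor(as.POSIXlt(day_sessions$start)$hour, levels = 0:23)
  tapply(day_sessions$energy, hours, sum, default = 0)
}

# Split the sessions into 24-hour windows and process the windows in parallel
daily_profiles_parallel <- function(sessions, n_cores = 2) {
  by_day <- split(sessions, format(sessions$start, "%Y-%m-%d"))
  cl <- makeCluster(n_cores)
  on.exit(stopCluster(cl), add = TRUE)
  parLapply(cl, by_day, daily_profile)
}

# Example with three days of synthetic sessions
set.seed(1)
sessions <- data.frame(
  start = as.POSIXct("2019-01-07", tz = "UTC") + sort(runif(50, 0, 3 * 86400)),
  energy = runif(50, 1, 20)
)
profiles <- daily_profiles_parallel(sessions)
```

Since no window depends on another, the parallel result is the same as processing the days one after another with lapply().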

In computer science, a common practice to reduce computation time is parallel processing, which splits the work across the multiple cores of a CPU that can work independently. We can discover the number of cores in our computer's CPU using the function parallel::detectCores():

n_cores <- parallel::detectCores()
print(n_cores)
## [1] 14

In this case, the CPU used is the Apple M4 Pro, which features 10 performance cores (P-cores) running at up to 4.5 GHz along with 4 efficiency cores (E-cores) running at up to 2.6 GHz. So, when parallel::detectCores() returns 14, macOS is exposing 14 logical cores, but not all of them are equally fast.
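If only the fast cores should be used, their number can be queried separately. A possible sketch, assuming the macOS `sysctl` key `hw.perflevel0.physicalcpu` (which on Apple silicon reports the cores in the highest performance level); the helper `performance_cores()` is hypothetical and falls back to parallel::detectCores() on other systems or if the key is missing.

```r
# Hypothetical helper: number of performance cores on Apple silicon Macs,
# otherwise the total reported by parallel::detectCores().
# Assumes the macOS sysctl key hw.perflevel0.physicalcpu exists.
performance_cores <- function() {
  if (Sys.info()[["sysname"]] == "Darwin") {
    out <- tryCatch(
      suppressWarnings(system2("sysctl", c("-n", "hw.perflevel0.physicalcpu"),
                               stdout = TRUE, stderr = FALSE)),
      error = function(e) character(0)
    )
    n <- suppressWarnings(as.integer(out[1]))
    if (length(n) == 1 && !is.na(n)) return(n)
  }
  parallel::detectCores()
}

n_fast_cores <- performance_cores()
```

On the M4 Pro described above, this would be expected to return 10 rather than 14, which matches the upper limit of cores used in the benchmark below.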

In evsim, parallel processing is used inside the get_demand() and get_occupancy() functions through the mirai package, whose mirai::daemons() function sets the number of background processes (daemons), and therefore cores, that work in parallel. Below you will find an example of how to use this functionality.
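Because the daemons stay active until they are reset with mirai::daemons(0), it can help to wrap the set-up and reset in one place so the reset also happens if a calculation fails. The helper `with_daemons()` below is a hypothetical sketch, not part of evsim or mirai; it only calls mirai::daemons(n) and mirai::daemons(0), and, as in the example below, starts no daemons for a single core.

```r
# Hypothetical helper: evaluate `expr` with `n` mirai daemons and always reset
# them afterwards, even if `expr` throws an error. With n <= 1 no daemons are
# started, so mirai is not needed in that case.
with_daemons <- function(n, expr) {
  if (n > 1) {
    mirai::daemons(n)
    on.exit(mirai::daemons(0), add = TRUE)
  }
  expr # lazily evaluated here, after the daemons have been launched
}

# Single-core usage, which runs in the current R session
total <- with_daemons(1, sum(1:10))
```

For instance, `with_daemons(mcc, system.time(evsim::get_demand(sessions, by = "Profile", resolution = 15)))` would launch the daemons before the timed expression is evaluated, and reset them afterwards.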

Time-series EV demand calculation

The evsim package provides a sample data set of California EV sessions from October 2018 to September 2021. If we filter the sessions from 2019, we get more than 15,000 sessions, with an average of 70 charging sessions per working day.
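An average like this can be checked by counting sessions per calendar day and averaging over Monday to Friday. The base R sketch below assumes a POSIXct vector of start times; the function name is hypothetical, and with the real data it could be called on `sessions_2019$ConnectionStartDateTime`. Days without any session are not counted and holidays are not excluded, so the result is only an approximation.

```r
# Average number of sessions per working day (Monday to Friday), given a
# POSIXct vector of session start times. Days without sessions are not counted.
avg_sessions_per_working_day <- function(start_times) {
  wday <- as.POSIXlt(start_times)$wday # 0 = Sunday, ..., 6 = Saturday
  working <- wday %in% 1:5
  # Count sessions per calendar day, using the time zone of start_times
  per_day <- table(format(start_times[working], "%Y-%m-%d"))
  mean(as.numeric(per_day))
}

# Hypothetical example: 3 sessions on Monday, 1 on Tuesday, 2 on Saturday
example_starts <- as.POSIXct(c(
  "2019-01-07 08:00", "2019-01-07 09:30", "2019-01-07 17:00",
  "2019-01-08 10:00",
  "2019-01-12 11:00", "2019-01-12 12:00"
), tz = "UTC")
avg_sessions_per_working_day(example_starts) # returns 2
```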

library(dplyr)
library(lubridate)
sessions_2019 <- evsim::california_ev_sessions_profiles %>%
  filter(year(ConnectionStartDateTime) == 2019)

Let's use these real charging sessions to calculate their time-series demand with the get_demand() function, using different numbers of cores (set with mirai::daemons()) and different numbers of days (optimization windows):

n_days_seq <- c(3, 7, 15, 30, 120, 365) # Number of days (optimization windows)
n_cores_seq <- c(1, seq(2, 10, 2)) # Up to the 10 performance cores (P-cores)

# One row per combination of days and cores; time is filled in the loop
cores_time <- tibble::tibble(
  days = rep(n_days_seq, each = length(n_cores_seq)),
  cores = rep(n_cores_seq, length(n_days_seq)),
  time = 0
)

for (nd in n_days_seq) {
  message(nd, " days ---------------- ")
  # Keep the sessions from 1 January 2019 up to nd days later
  sessions <- sessions_2019 %>%
    filter(date(ConnectionStartDateTime) <= dmy("01012019") + days(nd)) %>%
    evsim::adapt_charging_features(time_resolution = 15)

  for (mcc in cores_time$cores[cores_time$days == nd]) {
    message(mcc, " cores")
    # Define daemons (with 1 core, no daemons are started)
    if (mcc > 1) {
      mirai::daemons(mcc)
    }
    # Execute and time the calculations
    results <- system.time(
      sessions %>%
        evsim::get_demand(by = "Profile", resolution = 15)
    )
    # Reset daemons
    mirai::daemons(0)
    # Store the elapsed (wall-clock) time in seconds
    cores_time$time[
      cores_time$cores == mcc & cores_time$days == nd
    ] <- as.numeric(results["elapsed"])
  }
}
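A single system.time() measurement per configuration can be noisy, especially for the short runs with only a few days. One possible refinement, sketched under the assumption that a few repetitions per configuration are affordable, is to time each configuration several times and keep the median. `median_elapsed()` is a hypothetical helper, not an evsim function.

```r
# Hypothetical helper: time a zero-argument function `reps` times and return
# the median elapsed (wall-clock) time in seconds, which is less sensitive to
# one-off noise (e.g. background processes) than a single measurement.
median_elapsed <- function(fun, reps = 3) {
  times <- vapply(seq_len(reps), function(i) {
    system.time(fun())[["elapsed"]]
  }, numeric(1))
  median(times)
}

# Example: a function that sleeps for 0.05 seconds
t_sleep <- median_elapsed(function() Sys.sleep(0.05), reps = 3)
```

Inside the loop it could replace the system.time() call, e.g. `median_elapsed(function() evsim::get_demand(sessions, by = "Profile", resolution = 15))`, while the daemons are active, so that daemon start-up is still kept outside the timed code.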

# Adapt variables for a better plot

cores_time <- cores_time %>%

mutate(

days = factor(

paste(days, "days"),

levels = factor(paste(n_days_seq, "days"))

),

cores = factor(cores)

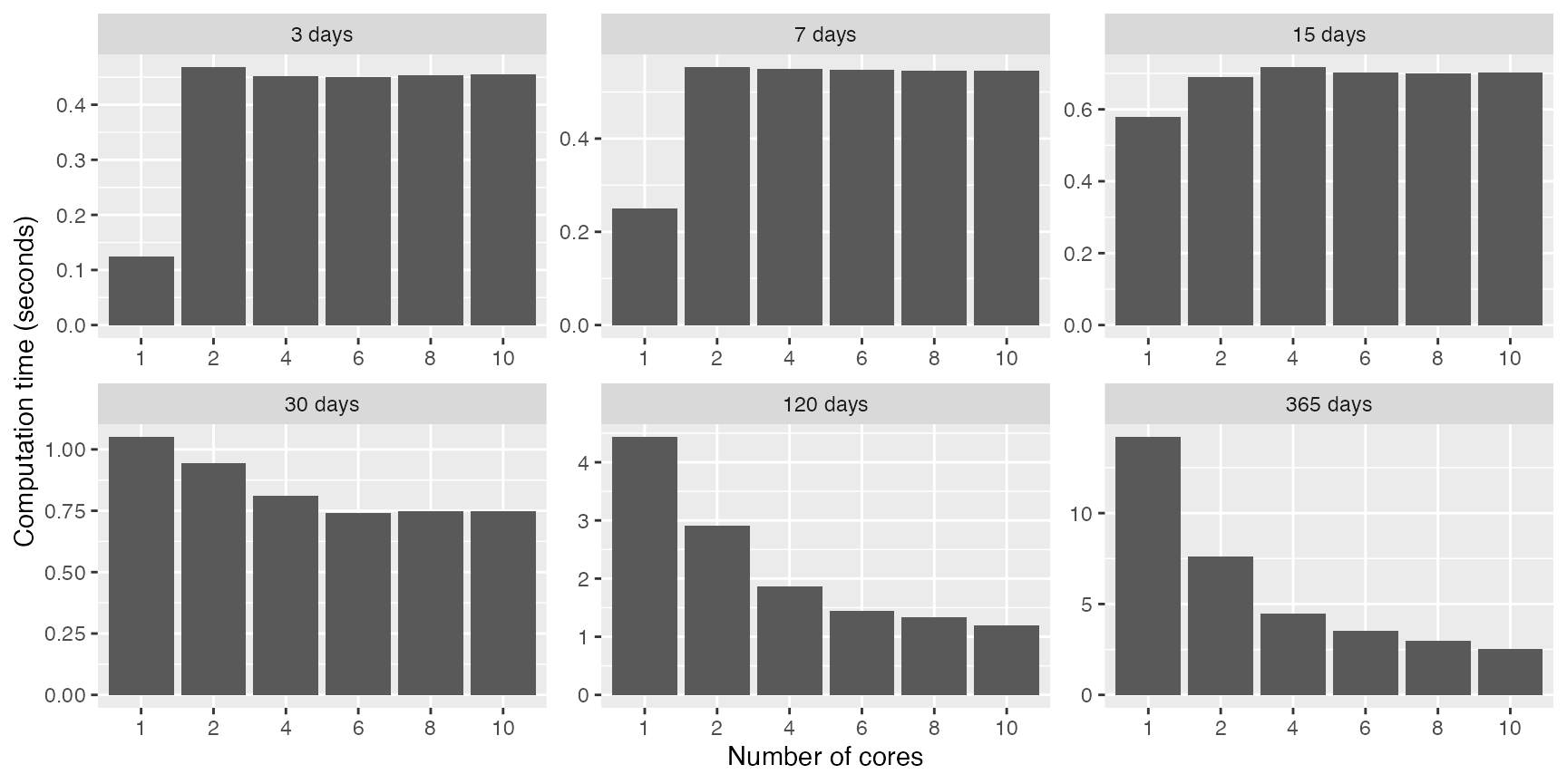

)The plot below shows that:

- Parallel processing is worth it from more than 1-month data sets (at least for this case with ~70 charging sessions per day)

- From 6 cores the reduction in computation time is not very relevant

cores_time %>%

ggplot(aes(x = cores, y = time)) +

geom_col() +

facet_wrap(vars(days), scales = "free", nrow = 2) +

labs(x = "Number of cores", y = "Computation time (seconds)")
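The diminishing returns can be put in numbers with the speedup S(n) = T(1)/T(n) and the parallel efficiency E(n) = S(n)/n. The sketch below is a hypothetical helper, not part of evsim: `speedup_table()` assumes the numeric cores_time table produced by the loop (before the factor conversion for plotting), and `amdahl_speedup()` is the textbook Amdahl's law, 1/((1 - p) + p/n), where p is the fraction of the work that can run in parallel. One plausible, unverified reading of the plot is that sequential parts, such as sending data to the daemons and combining the daily results, limit the achievable speedup.

```r
# Speedup and parallel efficiency per number of days, from a data frame with
# numeric columns days, cores and time (must include a 1-core row per days value)
speedup_table <- function(timings) {
  base <- timings[timings$cores == 1, c("days", "time")]
  names(base)[2] <- "time_1core"
  out <- merge(timings, base, by = "days")
  out$speedup <- out$time_1core / out$time
  out$efficiency <- out$speedup / out$cores
  out <- out[order(out$days, out$cores), c("days", "cores", "time", "speedup", "efficiency")]
  rownames(out) <- NULL
  out
}

# Amdahl's law: ideal speedup with n cores when a fraction p of the work
# can run in parallel and the rest stays sequential
amdahl_speedup <- function(p, n) 1 / ((1 - p) + p / n)

# Hypothetical timings, for illustration only
example_timings <- data.frame(days = 30, cores = c(1, 2, 4), time = c(8, 4, 4))
example_speedup <- speedup_table(example_timings)
```

For example, if 90% of the work were parallelisable, Amdahl's law would cap the speedup at about 5.3 with 10 cores, and at 10 even with unlimited cores.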